Large Language Models (LLMs) such as GPT-3, GPT-4, LLaMA, and PaLM are increasingly integrated into cybersecurity workflows for tasks ranging from phishing detection and log analysis to malware classification and threat intelligence generation. At the same time, these models introduce new risks, including hallucinations, model-stealing attacks, prompt injection, and data leakage. This study conducts a Systematic Literature Review (SLR) of academic and industrial research on the applications and challenges of LLMs in cybersecurity between 2018 and 2024, following the PRISMA methodology. A total of 100 highly relevant and influential papers were selected after multi-stage identification, screening, eligibility assessment, and inclusion. The results show that defensive applications such as phishing and spam detection, log and incident analysis, and policy generation dominate current research, while offensive uses and LLM-specific vulnerabilities are gaining attention. Although LLM-based systems can achieve high accuracy in text-based detection tasks, their effectiveness is lower for network traffic analysis and real-time intrusion detection. The review highlights key technical, ethical, and legal challenges and identifies research gaps, especially regarding domain-specific LLMs and robust security evaluation. The findings provide guidance for researchers and practitioners on how to responsibly leverage LLMs in cybersecurity operations.

Публикации

Похожие материалы

- Research paper on a topic: Is it wisely to follow all Kazakh traditions in a modern world?

- The farming and environment. Is agriculture and cattle more dangerous than useful?

- Логарифмы

- Innovation technology

- Word lists for Spelling Bee Contest

- Nuclear energy and radiation: salvation or health risk?

- The best strategies to prepare for IELTS

- Is it possible to combine orphanages and nursing homes in Kazakhstan?

- Развитие логического мышления на уроках математики в начальной школе

- Internal computer devices

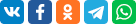

Наши олимпиады